Linux Zero Copy

Purpose

The purpose of this document is to highlight some of the aspects of various Linux subsystems (virtual memory, virtual file system) and how they relate to storage subsystems (file systems and block device drivers). Specifically, the goal is to provide enough information to intelligently design inter-process communication and logging software.

Virtual Memory

Much of this section is summarized from the very good introduction on The Linux Documentation Project.

The virtual memory subsystem presents a view of system memory that is larger than the physical memory available. In addition it provides a number of other features:

- Large address spaces

- Process isolation and protection

- Memory mapping of files or devices

- Allocation / division of physical memory

- Shared memory

Abstract Model

The address space of a process is the set of all addresses representable in a pointer (i.e. a 32 or 64 bit word). In userspace, all memory accesses are made against virtual addresses. A virtual address within the process address space is translated to physical addresses by lookup tables managed by the kernel. In order to keep these lookup tables efficient, they operate on fixed sized regions of memory, one unit of which is called a page. The lookup tables are called page tables. On x86 and arm the page size is 4kb (4096 bytes).

Virtual addresses are composed of two fields: frame number and offset. The frame number is the table entry used to lookup the physical page and the offset is where within the physical page the address refers to.

Abstract model of Virtual to Physical address mapping

The page table keeps track of some metadata associated with each mapping:

- Is this page table entry valid

- The physical page number this entry points to

- Access control: read, write, executable

The physical processor is able to use the page table that is managed by the kernel. When a program attempts to access memory the processor will lookup the physical page from the table and (if valid) complete the access operation. If the table entry is not valid, it notifies the kernel by issuing a page fault.

Demand Paging

Given that each process has it's own address space the amount of addressable virtual memory in a multiprocess system can be much greater than the physical memory. Linux implements a number of strategies to efficiently utilize this limited physical memory. One of those strategies is demand paging of process images.

When a program (in ELF format) is first started, the ELF interpreter maps the program file into memory at which point we refer to it as the process image. This mapping is initially unresolved (except for the initial portion) in the page table and physical memory is not yet dedicated to the process image. Instead, pages are filled on demand in response to page faults encountered as the program image is accessed.

When the processor encounters a memory access in the program flow (it may need to fetch the next instruction, jump to a different instruction, or fetch or write to memory) it resolves the virtual address to a physical address through the processes page table. As mentioned above, if there is no entry in the process page table for that virtual address or if the entry is invalid the processor issues a page fault and control of the processor moves to the kernel.

For example, in the figure above there is no entry in process 1's page table for virtual page frame number 2 and so if process 1 attempts to read from an address within virtual page frame number 2 the processor cannot translate the address into a physical one.

If the virtual address of the access that induced the page fault is invalid, then the program is trying to read or write to an address that it has not configured. This is known as a segmentation fault. The kernel will signal the program with SIGSEGV and the program counter will jump to the signal handler (usually resulting in process termination).

If the virtual address of the access is valid but there is no physical page backing it, the kernel must assign a physical page to that virtual page, and then fill that page with the program contents read off from disk. In general this is a time consuming process and so this is an opportunity for the scheduler to service some other process on the processor that issued the fault. Once the fetched page is copied to physical memory and an entry is added to the page table, the process is restarted at the faulting instruction. This time the virtual memory address is successfully translated to a physical address by the processor and the program continues.

Linux Page Cache

In general, all reads and writes to real files in Linux go through the Linux page cache. This is a fundamental aspect of Linux performance and has far-reaching implications, including some that we may exploit for optimization.

The Linux page cache is an in-memory generally write-back/read-through cache for file data. When data is read from a regular file it is first moved to page cache, and then made available through the filesystem driver. When data is written to a file, it is first copied to the page cache, and then flushed out to storage at some point later.

The purpose of the page cache is to speed up access to files on storage media. Files are generally read in a page at a time and these pages are stored in the page cache.

The Linux Page Cache

Each file in Linux is identified by an data structure called an inode, and in Linux pretty much everything is a file (and so has an inode associated with it). When a page from a memory mapped file is read, it is processed through the page cache. If the cache is hot the page is served out of the cache. Otherwise a physical page is allocated and the filesystem or storage driver is informed to fill the page.

Pages filled in the page cache generally stay resident until something other demand pushes them out. This is of particular note because, in general, most of the physical memory is in use on Linux (by at least the page cache).

Recovering physical memory

The Linux kernel attempts to keep a pool of physical memory available for future use. The configurable behavior of this pool has two relative parameters:

- high water mark

- low water mark

If the amount of physical memory available is greater than the high water mark then the kernel does nothing at all. Anything currently paged into the page cache is left there indefinitely.

Between the high water mark and low water mark the kernel begins to take action. It will start to evict pages out of physical memory. Below the low water mark the kernel gets more aggressive. The difference between the two is the number of pages the kernel will try to free during each attempt.

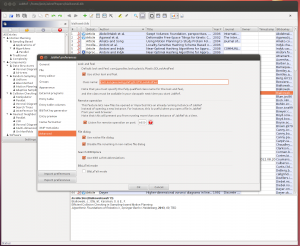

kswapd decision points. (a): with lots of physical memory available, the swap daemon doesn't do anything. (b): when available memory drops below the high water mark, the swap daemon attempts to free a couple of pages per timer tick. (c): when available memory drops below the low water mark, the daemon attempts to free more pages per tick.

The whole process is done by the a kernel thread called the kernel swap daemon (kswapd). It is serviced on a timer and at each service it looks at the number of free pages and takes action.

When the swap daemon decides to try and free memory it first looks for page cache entries that can be discarded. It does this by walking around the page cache and inspecting some fixed number of pages at each iteration (clock algorithm), looking for any pages that can be discarded. A page is discardable if the page is not mapped into any process address space.

If the swap daemon doesn't recover enough pages through discarding of disk cache it will then attempt to swap out or discard mapped pages. It looks only at processes that are swappable (some are not), and that have swappable pages. A page can be locked removing it from the candidate pool of swappable pages. If disk swap is enabled, the swap daemon will consider swapping it out to swap file only if it cannot be recovered in another way. Demand-paged program storage, for instance, can be discarded without swapping because the data can be read back from disk if it's needed again later. The swap daemon will preferentially page out old pages versus those that were used recently.

Virtual File System

Again, much of this section is summarized from the very good introduction on The Linux Documentation Project

The Linux Virtual File system (VFS) allows the operating system to interact with heterogeneous storage media utilizing a wide array of different filesystems. Filesystem drivers in Linux translate VFS interactions to filesystem specific interactions with the underlying storage media.

Schematic of the Linux virtual filesystem and caching.

The basic building block of the Linux VFS is the inode. Every file in the filesystem is described by one and only one inode. They describe both the contents and topology of the VFS. inodes and directory contents are cached in the page cache like file contents, though these cache entries are not 1-1 mappings with data on the block-device of the storage medium. Rather, they are translated by the filesystem driver when they are read in. Never-the-less, they still are generally discardable cache entries as the data can always be restored by reading back the relevant blocks of the underlying storage medium (through the translation layer of the filesystem driver).

A filesystem is basically two things:

- a specification for how file contents, metadata, and directory information are laid out on a continuous storage medium

- a driver software which interprets this specification and provides a consistent API for the kernel to interact with.

Storage media are block devices which are represented in Linux as files. Like other files they get an inode representation in the VFS and reads and writes to these files are cached in the page cache. When the filesystem driver reads data from the block device to, for instance, enumerate inodes or directory entries, the entire block is pulled into the page cache and then the exact data needed is read, interpreted, and used to fill inode and directory structures. These structures are themselves stored within pages of memory pulled from the page cache pool and are subject to cache rules.

Normally read() and write() act similarly. When the userspace read()s data from a file, the filesystem driver copies data from the block device into the userspace buffer for the read.

Memory-mapped files are (potentially) dealt with a little differently. If the file contents are stored page-aligned and byte-for-byte on the block device (they are for a sane filesystem) then the filesystem driver can implement an optimization informing the kernel to map the existing cache pages directly into the process page table.

Process Memory

The fundamental API with which a userspace program interacts with the kernel virtual memory subsystem is through the mmap(2) system call (and it's glibc wrapper function):

void *mmap(void *addr, size_t length, int prot, int flags, int fd, off_t offset); int munmap(void *addr, size_t length);

From the Linux manual:

mmap() creates a new mapping in the virtual address space of the calling process. The starting address for the new mapping is specified in addr. The length argument specifies the length of the mapping (which must be greater than 0).

mmap() can be used to map the contents of a file into the process address space. The process can then read from or write to the file by simply dereferencing a pointer. In particular:

The contents of a file mapping (as opposed to an anonymous mapping; see MAP_ANONYMOUS below), are initialized using length bytes starting at offset in the file (or other object) referred to by the file descriptor fd. offset must be a multiple of the page size as returned by sysconf(_SC_PAGE_SIZE).

Of particular note is how program instructions are accessed, which was introduced previously in the discussion on the virtual memory subsystem. Consider the execution of a program in the Executable and Linker File (ELF) format. When a program is executed with the exec(3) family of functions (and the underlying system call) Linux will replace the current process image with the system interpreter by mapping it into process address space and moving the program counter to first address in the interpreter program. The interpreter then maps the ELF file into memory, parses out some of the metadata, and then jumps to the start of the program in the mapped file. We often refer to the ELF file (as mapped into memory) as the program image. When the interpreter maps this file into memory it does so as an executable read-only mapping.

Calling mmap() with flags |= MAP_ANONYMOUS is how a process maps general purpose physical memory into it's address space. Specifically:

The mapping is not backed by any file; its contents are initialized to zero. The fd argument is ignored; however, some implementations require fd to be -1 if MAP_ANONYMOUS (or MAP_ANON) is specified, and portable applications should ensure this. The offset argument should be zero. The use of MAP_ANONYMOUS in conjunction with MAP_SHARED is supported on Linux only since kernel 2.4.

This is the underlying mechanism of how memory allocators (i.e. malloc()) work. They call mmap() to map physical pages into the process address space, then they add additional metadata and various global data structures to provide a higher level interface on top of that. Note that the glibc implementation of malloc() never calls munmap(). Any free()ed memory is kept mapped for reuse in a later malloc() call.

Calling mmap() with a file descriptor of an open file handle will initialize the map with the contents of the file, starting at offset. Calling with flags |= MAP_SHARED means that updates to the mapping are visible to other processes with the same region mapped into their address space, and (in the case of a file mapping), writes the the map are carried through to the underlying file. Specifically:

MAP_SHARED Share this mapping. Updates to the mapping are visible to other processes mapping the same region, and (in the case of file-backed mappings) are carried through to the underlying file. (To precisely control when updates are carried through to the underlying file requires the use of msync(2).)

A shared mapping (whether anonymous or file-backed) allows the physical page to reside in the page table for more than one process.

A note on O_DIRECT

In the above zerocopy discussion we eliminate additional copies by taking advantage of the existing page cache copy of the data. Another option that can reduce data copies during write is to write to a file opened with O_DIRECT. The exact semantics of O_DIRECT depends on the implementation of the filesystem driver, but in general:

O_DIRECT (since Linux 2.4.10) Try to minimize cache effects of the I/O to and from this file. In general this will degrade performance, but it is useful in special situations, such as when applications do their own caching. File I/O is done directly to/from user- space buffers. The O_DIRECT flag on its own makes an effort to transfer data synchronously, but does not give the guarantees of the O_SYNC flag that data and necessary metadata are transferred. To guarantee synchronous I/O, O_SYNC must be used in addition to O_DIRECT.

Usually what this means is that a write() does not copy data to page cache. Instead, the file system driver will immediately attempt to flush data to the underlying storage medium. As mentioned in the manual quote above, in general this will degrade performance. Specifically, the filesystem and storage drivers may be forced to service the write() immediately, rather than interleaving the data flush with other system operations in an optimal way.

Filesystem Reliability

While the Linux page cache is a significant boon to performance in general, one penalty of this system is the reliability of storage on an unclean shutdown or sudden loss of power situation. Any number filesystem changes may appear to a userspace program to have been written to disk, when in fact they have only been written to disk cache. This includes:

- new files

- deleted file

- new data written to a file

- file changed size

Consider specifically the case of the ext2 filesystem, for which the inode structure is illustrated below.

EXT2 filesystem inode structure and how it points to file data.

The inode structure forms the root of an unbalanced tree of data blocks containing the actual file data. For small files all the data blocks are referenced directly in the inode data structure. For larger file, the first segment is referenced directly by the inode, and then for later segments the inode points to another data structure, which then points to the data blocks.

Now consider a process which performs following steps (indicated with their libc function calls):

- open() a new file (i.e. with flags |= O_CREAT)

- periodically write() a chunk of data to the file

- close the file

Let's walk through the sequence of filesystem operations that will occur underlying the hood.

- When the file is created, a new inode structure is allocated for the file, then a directory entry is added to the parent directory file pointing to this new inode.

- Each time we write to the file, the filesystem driver will first check to see if there is an unfilled block already allocated to hold the data. If not it will find a free block and add a pointer to it in the inode and update it's accounting of the file size. Then it will fill that block with data and update the file modification time.

- As the file gets larger, we'll overflow the direct blocks and the filesystem driver will need to allocate an indirect block structure, add a pointer to the inode structure, and then allocate a block and add a pointer to the indirection structure.

Each of these modifications is essentially a write to some block of the underlying block device. Each of these writes are subject to page caching and so the actual write really just happens in memory. At some point in the future those dirty pages are flushed out to disk and persisted. In an unclean shutdown or sudden power loss any write waiting in page cache to be flushed will not be persisted to disk. This can include:

- file data

- inode data

- indirect block

- entry data

- directory content data.

Modern journaled filesystems (like ext4) deal with this situation by recording in a "journal" all filesystem modifications in-sequence. It ensures these journal entries are flushed to disk before the corresponding data changes are, so that any outstanding changes can be completed by the filesystem driver the next time it is started.

However, it is important to recognize the nature of these interactions and design filesystem access patterns to best account for what will happen on a sudden power loss. In the example above each incremental write may lead to a significant change of filesystem metadata. A sudden power loss may lose those metadata changes, those data changes, or both. A better design for such a process would be to open the file for writing, and then pre-truncate it to the desired size (if possible) or to some reasonably conservative estimate of it's size. This way the filesystem updates all it's metadata and storage allocations up front and it doesn't have to do any of those updates on the fly. A sudden power loss might still lose data of course, but it will be limited to only the unflushed pages in the page cache and not due to missing filesystem metadata or pointers.

A Note on Memory Usage Metrics

When it comes to system-level performance monitoring there are a couple of top level metrics that are often tracked:

- CPU utilization

- GPU (or other co-processor) utilization

- memory utilization

Given the discussion of the page cache and virtual memory systems above, we can now discuss how to measure and meaningfully understand memory utilization on a Linux system. Most userspace tools (like free, for instance) get their information from /proc/meminfo, a virtual file served up from the kernel containing information about memory usage. Due to the Linux page cache and demand-paged memory access, nearly all physical memory (up to the kswapd low-watermark) on a Linux system will be in use. When we talk about "free" memory on a Linux system we are usually referring to the MemAvailable entry of /proc/meminfo.

MemAvailable %lu (since Linux 3.14) An estimate of how much memory is available for start‐ ing new applications, without swapping.

Pages that are reclaimable without swapping include unlocked pages of both the disk cache and buffer cache, including demand-paged file data which is currently resident. Linux's accounting for this value, however, is inexact. It may not know whether a page memory can really be reclaimed until the swap daemon gets around to actually trying to free it. In addition, the fact that this amount of memory can be reclaimed doesn't mean that it can be reclaimed and repurposed instantaneously, and some actions may fail due to low memory if the swap daemon can't find enough pages to release fast enough.